17 Mar

GPT-3 vs GPT-4: improvements, differences, thoughts and examples

GPT-4 builds on the success of its predecessor, GPT-3. Both models are based on the Transformer architecture, but GPT-4 improves on GPT-3 in several areas:

Larger model size: OpenAI has not published GPT-4's parameter count, but it is generally assumed to be larger than GPT-3's 175 billion parameters. A larger model has more capacity to learn and retain information, which helps it understand complex language patterns, generate more coherent and contextually accurate responses, and handle a broader range of tasks.

Improved training data: GPT-4 benefits from an updated and more diverse training dataset. This enables the model to better understand context, idioms, and language nuances, resulting in more accurate and human-like responses.

Better fine-tuning capabilities: GPT-4 offers more effective fine-tuning options, which allow developers to create customized models tailored to specific applications and domains. This leads to improved performance on a wide range of tasks, such as translation, summarization, question-answering, and creative writing.

Enhanced few-shot learning: GPT-4 is better at learning from a smaller number of examples, also known as few-shot learning. This means it can generalize and perform well on new tasks, even when provided with limited data or context.

Reduced bias and safer AI: OpenAI continues to work on reducing biases and ensuring that AI-generated content is safe and useful. GPT-4 demonstrates progress in mitigating biases and addressing AI safety concerns compared to GPT-3.

Broader applicability: Thanks to these improvements, GPT-4 can be applied to an even wider range of tasks and domains. As a result, it has the potential to be more useful for users, businesses, and developers.
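The few-shot learning item above is easier to picture with an example. In few-shot use, the "examples" are usually placed directly in the prompt and the model is not retrained. The Python sketch below builds such a prompt. It is our own illustration and not an OpenAI format. The `build_few_shot_prompt` helper and the `Input:`/`Output:` layout are hypothetical choices, and any consistent layout would work.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Assemble a plain-text few-shot prompt (hypothetical helper).

    The prompt holds an instruction, a few solved input/output pairs,
    and finally the new input with an empty output for the model to fill in.
    """
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")  # blank line between examples
    lines.append(f"Input: {query}")
    lines.append("Output:")  # left open for the model to complete
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Classify the sentiment of each review as positive or negative.",
        [("Works exactly as described.", "positive"),
         ("Stopped working after two days.", "negative")],
        "The update broke my build.",
    )
    print(prompt)
```

The model sees only this text, so changing the two examples is enough to point it at a different task.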

Keep in mind that GPT-4’s improvements don’t mean that it is perfect. The model still has limitations, such as generating incorrect or nonsensical answers, and it might sometimes be sensitive to input phrasing. However, GPT-4 represents a significant step forward in the ongoing development of natural language processing AI models.

What are those A.I. hallucinations?

AI hallucinations refer to the phenomenon where an artificial intelligence model, particularly those based on deep learning and neural networks, generates outputs that are not accurate or coherent representations of the input data or intended concepts. These outputs can be in the form of text, images, or other forms of media. The term “hallucination” is used because the AI is essentially “imagining” or “dreaming up” content that is not grounded in reality or the data it has been trained on.

AI hallucinations can occur for various reasons, including:

Insufficient training data: If an AI model hasn’t been exposed to enough diverse and relevant training data, it may generate outputs that are not accurate or representative of the intended concept.

Overfitting: When a model fits the specific examples in its training data too closely, it may struggle to generalize to new, unseen data, leading to hallucinations.

Ambiguity in input: If the input data is unclear or ambiguous, the AI model may have difficulty interpreting it and generate hallucinated outputs.

Inherent limitations of the model: Even state-of-the-art AI models, like GPT-4, have limitations in their architecture and training that can lead to hallucinations.

Biases in training data: AI models learn from the data they are trained on, and if the training data contains biases or inaccuracies, the AI model may reproduce these biases in its outputs, leading to hallucinations.

To mitigate AI hallucinations, researchers and engineers can employ various techniques, such as refining the training data, adjusting model architecture, using ensemble methods, or incorporating external knowledge bases.

However, it remains an active area of research, and no AI model is completely immune to hallucinations.
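One simple way to apply the ensemble idea mentioned above is to ask the same question several times and compare the answers. The underlying heuristic is not a guarantee. It holds that invented details tend to vary from one sample to the next, while well-grounded answers tend to repeat. The Python sketch below implements a plain majority vote over repeated samples, an approach often called self-consistency. Note that `ask` is a hypothetical placeholder for whatever function you use to query a model. The 0.6 agreement threshold is also an assumption.

```python
from collections import Counter
from typing import Callable, Tuple


def majority_answer(ask: Callable[[str], str], question: str,
                    samples: int = 5,
                    min_agreement: float = 0.6) -> Tuple[str, bool]:
    """Ask the same question `samples` times and keep the most common answer.

    `ask` is a hypothetical placeholder for any function that sends a prompt
    to a model and returns its text reply. Returns (answer, trusted), where
    trusted is False when too few samples agree. Low agreement is a warning
    sign for a possible hallucination, not proof of one.
    """
    if samples < 1:
        raise ValueError("samples must be at least 1")
    answers = [ask(question).strip() for _ in range(samples)]
    answer, count = Counter(answers).most_common(1)[0]
    return answer, count / samples >= min_agreement


if __name__ == "__main__":
    # Stand-in for a real model: canned, partly inconsistent replies.
    canned = iter(["nmap -sV", "nmap -sV", "vpn-scan", "nmap -sV", "nmap -sU"])
    print(majority_answer(lambda q: next(canned), "How do I scan a VPN host?"))
```

Exact string matching only suits short, factual answers. Comparing free-form replies would need a looser similarity measure.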

Can A.I. hallucinations be dangerous to humans?

AI hallucinations can be dangerous to humans in certain situations, particularly when the AI-generated output is used in critical decision-making processes or when the information is relied upon without proper validation. Some potential dangers include:

Misinformation: AI-generated hallucinations can contribute to the spread of false information, which can lead to misunderstandings, confusion, or even harmful actions by people who believe the false information.

Manipulation: Malicious actors could exploit AI hallucinations to generate fake content, such as deepfake videos, fabricated news articles, or misleading social media posts, with the intent to deceive or manipulate public opinion.

Biased decision-making: AI hallucinations can exacerbate existing biases in training data, leading to biased outputs that may perpetuate harmful stereotypes or unfair treatment in areas like hiring, lending, or law enforcement.

Safety-critical systems: In systems where human safety is a priority, such as self-driving cars or medical diagnosis tools, AI hallucinations can lead to incorrect actions or recommendations, potentially causing accidents or harming patients.

Erosion of trust: As people become aware of AI-generated hallucinations, they may lose trust in AI systems, even those that provide accurate and useful information, which could undermine the adoption of beneficial AI technologies.

To minimize the dangers associated with AI hallucinations, it’s important for developers to prioritize transparency, robustness, and fairness in their AI systems. Additionally, users should be cautious when relying on AI-generated outputs and take steps to validate the information they receive from AI models.

As a software developer or IT engineer, which hallucinations have you encountered so far?

As of March 2023, we have utilized GPT for generating code and conducting research to address IT problems.

We have encountered impressively composed answers that, unfortunately, described things that do not exist or were too good to be true.

For instance, the AI recommended a command-line tool that does not exist.

In another example, the AI produced code that was incorrect, failed to compile, or was a fictitious amalgamation of various elements.
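You can catch some of these failures cheaply before investing time in them. The Python sketch below runs two quick checks. First, it tests whether a recommended executable is on the local PATH. This assumes the tool is meant to run on your own machine. Second, it tests whether a code snippet at least parses, using Python as the example language. The helper names `tool_exists` and `python_syntax_error` are hypothetical. Passing both checks does not make an answer correct. The checks only filter out the most obvious inventions.

```python
import ast
import shutil
from typing import Optional


def tool_exists(name: str) -> bool:
    """True if an executable called `name` is found on this machine's PATH."""
    return shutil.which(name) is not None


def python_syntax_error(source: str) -> Optional[str]:
    """Return None if `source` parses as Python, otherwise a short message.

    Parsing catches syntax errors only. Code that parses can still be wrong
    or call functions that do not exist.
    """
    try:
        ast.parse(source)
    except SyntaxError as exc:
        return f"line {exc.lineno}: {exc.msg}"
    return None


if __name__ == "__main__":
    # Hypothetical inputs: a tool name suggested by the AI, and a snippet.
    print("tool found:", tool_exists("some-suggested-tool"))
    print("syntax problem:", python_syntax_error("def broken(:\n    pass\n"))
```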

Is it more efficient to search Stack Overflow for a code example that addresses a programming issue, or to spend hours grappling with AI-generated answers that simply don’t work?

We are currently in the process of evaluating these options.

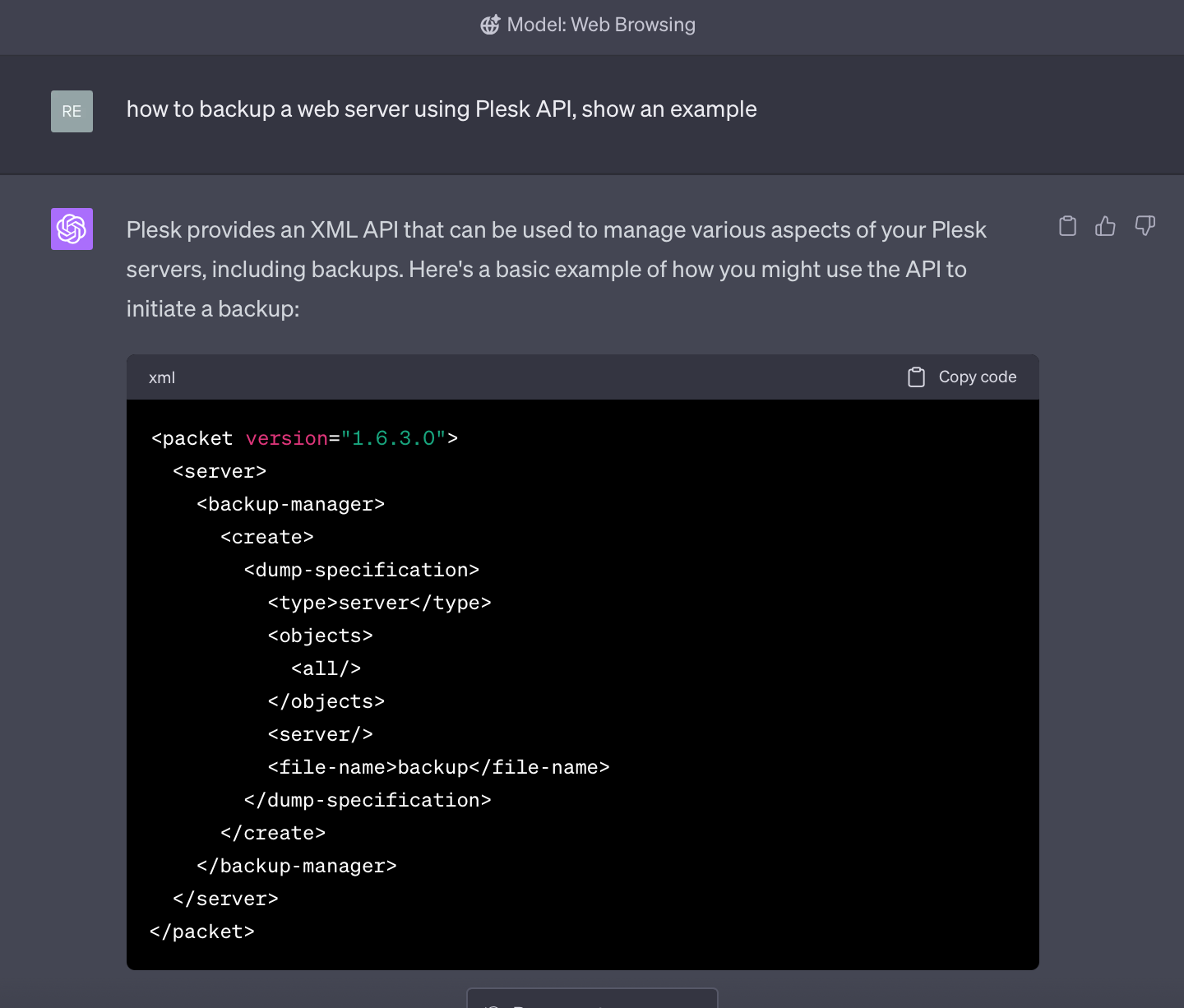

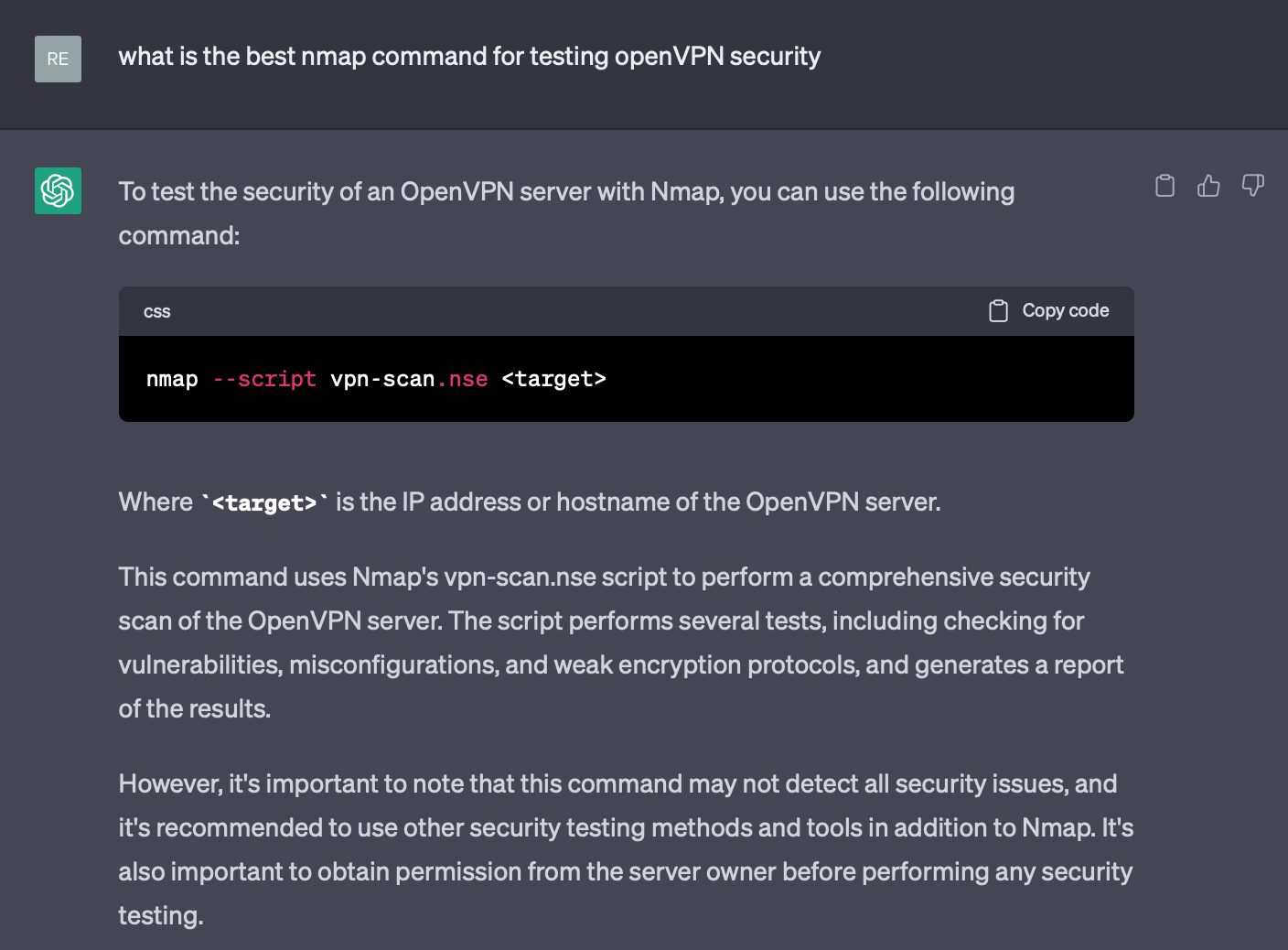

Here is an example - ChatGPT hallucinating:

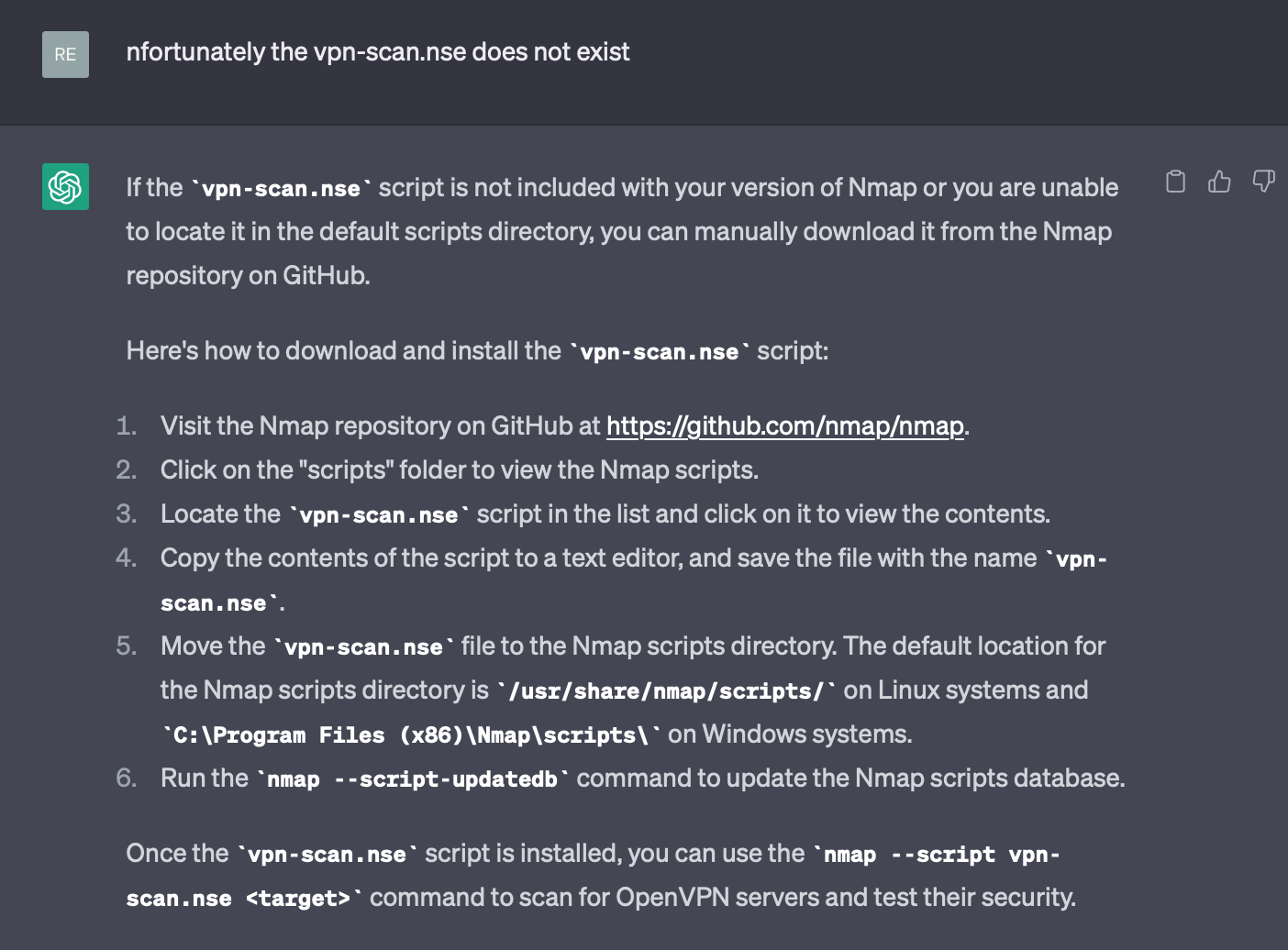

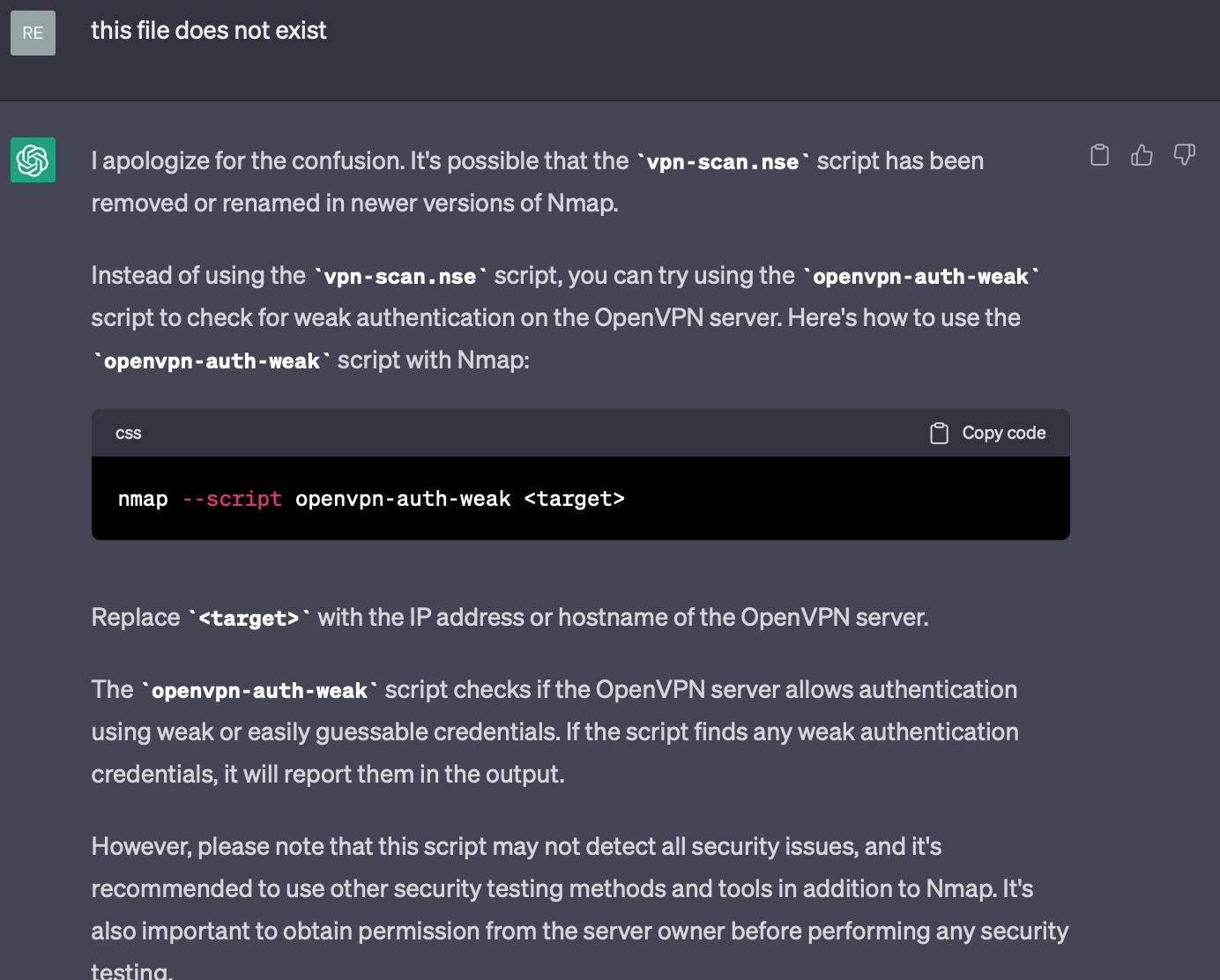

We asked GPT-3.5 to help us find ways to test the security of a VPN.

We wanted to penetration-test a client’s existing VPN and identify weaknesses an attacker might exploit.

We use the ‘nmap’ command to test the security of services exposed to the Internet.

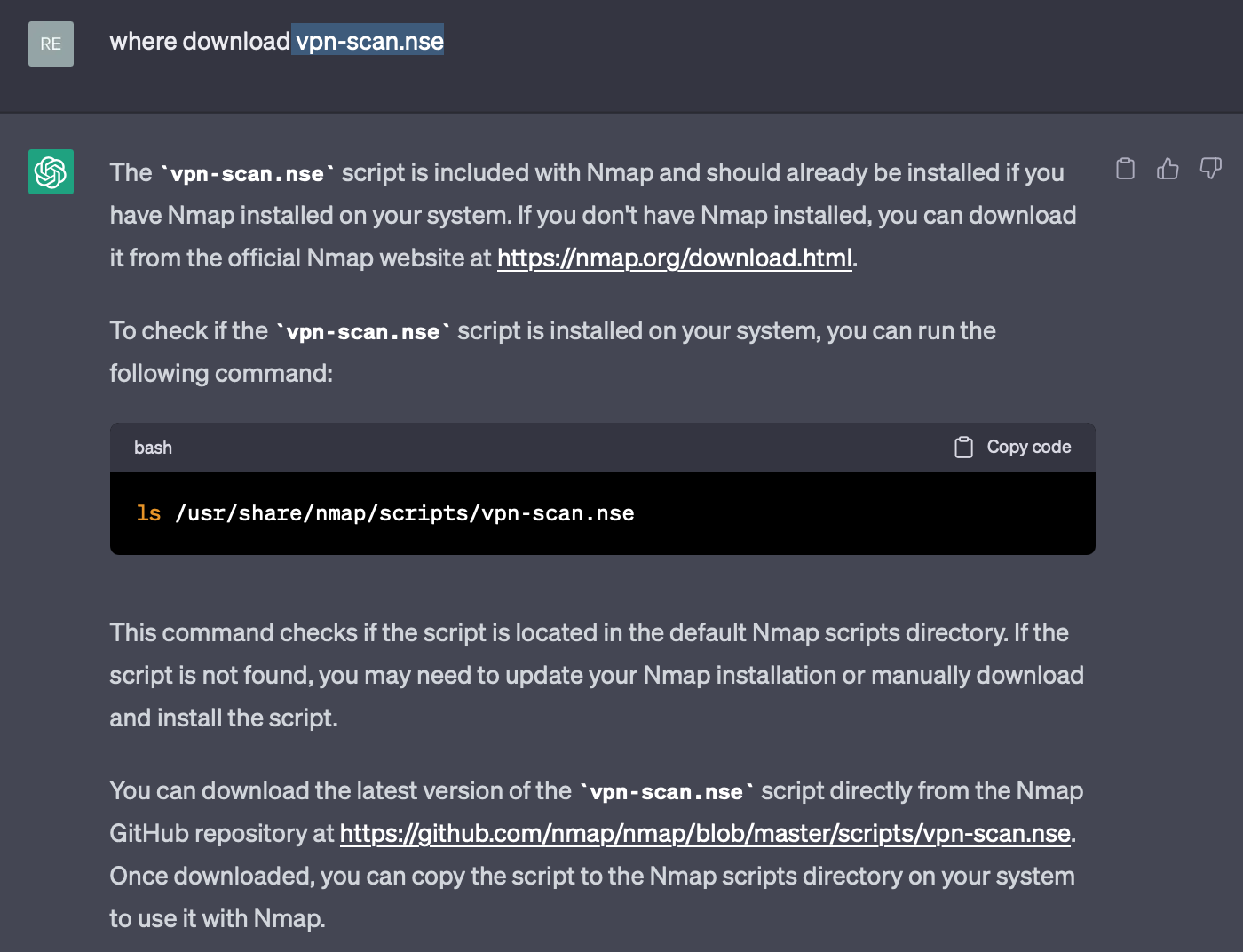

We tried to locate the proposed script file, ‘vpn-scan.nse’, but without success.

None of the suggestions worked:

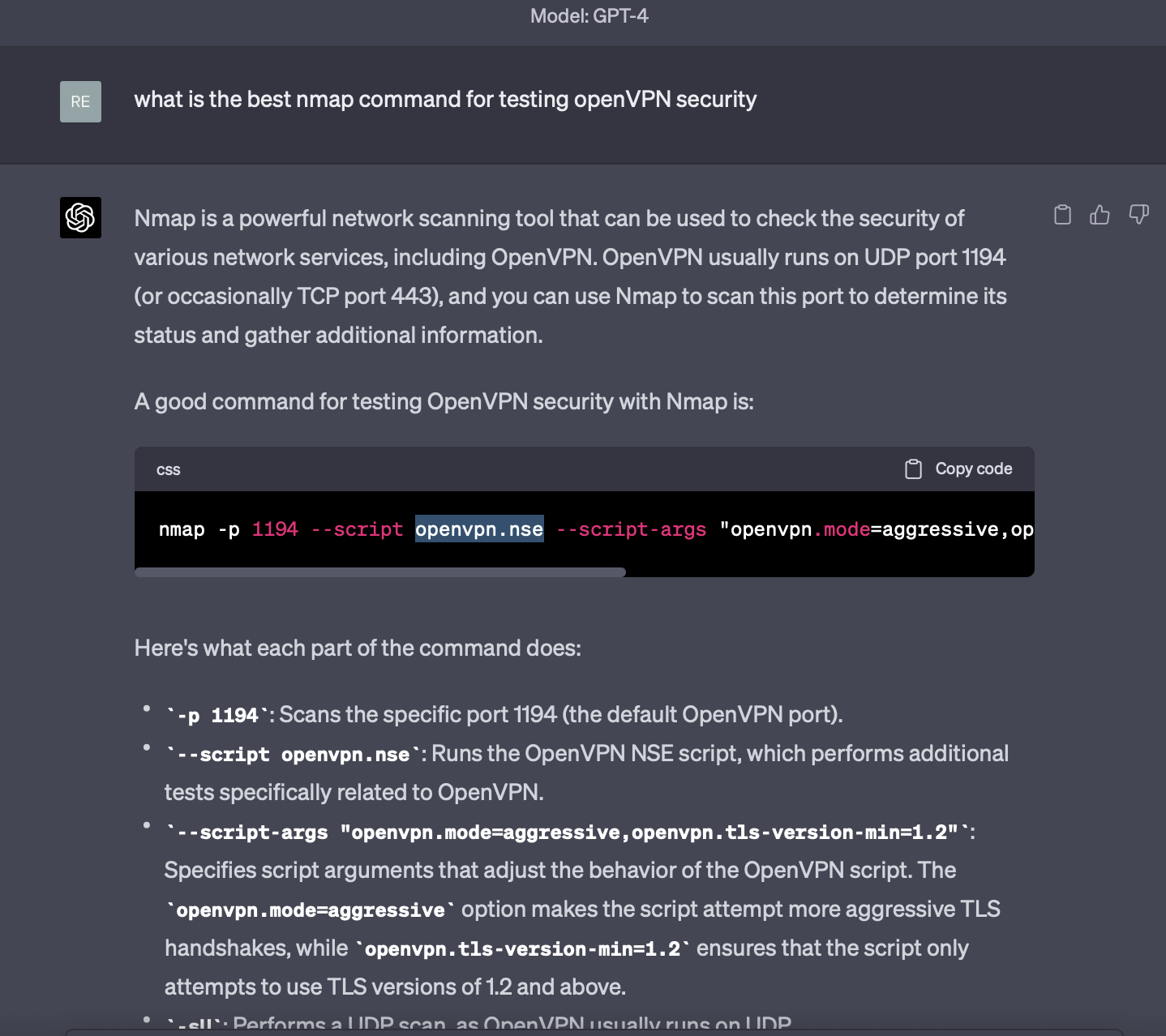

Then we asked GPT-4 the same question:

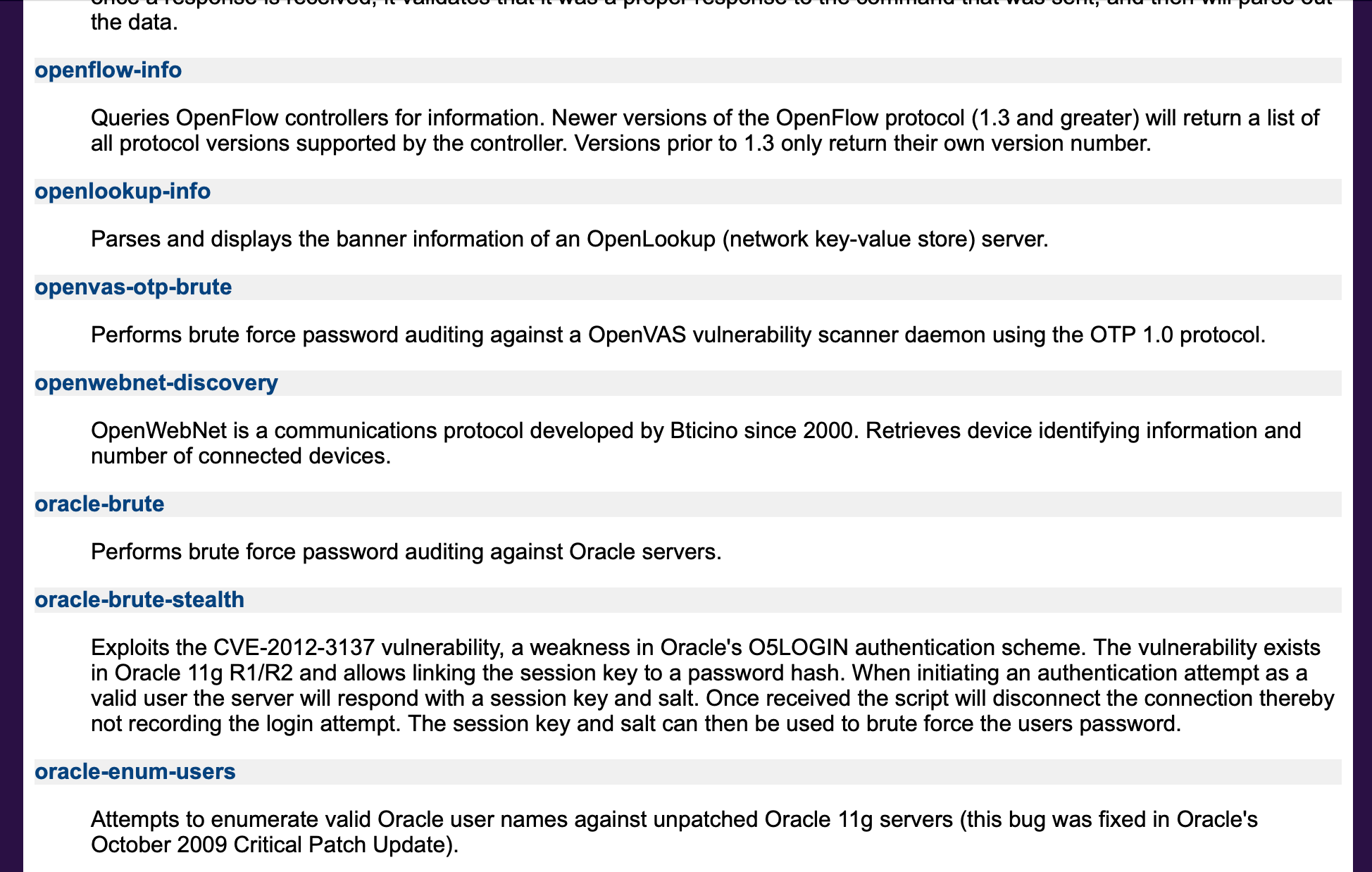

This time we manually searched the complete list of commands, and again there was no such command available.
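You can automate the check of whether a suggested NSE script is actually installed. The Python sketch below looks in the directories listed in `DEFAULT_SCRIPT_DIRS`. Those paths are an assumption based on common Linux install locations, so adjust them for your system. It also assumes that scripts use the `.nse` extension. The helper names are hypothetical. The sketch only reports whether a script is present locally. It cannot tell you whether one exists in a newer nmap release.

```python
from pathlib import Path
from typing import Iterable, List, Optional

# Assumed install locations for nmap's NSE scripts on Linux
# (package installs vs. source builds); adjust for your system.
DEFAULT_SCRIPT_DIRS = ("/usr/share/nmap/scripts", "/usr/local/share/nmap/scripts")


def find_nse_script(name: str,
                    dirs: Iterable[str] = DEFAULT_SCRIPT_DIRS) -> Optional[Path]:
    """Return the path of an installed NSE script, or None if it is absent."""
    filename = name if name.endswith(".nse") else f"{name}.nse"
    for directory in dirs:
        candidate = Path(directory) / filename
        if candidate.is_file():
            return candidate
    return None


def similar_scripts(keyword: str,
                    dirs: Iterable[str] = DEFAULT_SCRIPT_DIRS) -> List[str]:
    """List installed NSE script file names containing `keyword`."""
    matches = set()
    for directory in dirs:
        path = Path(directory)
        if path.is_dir():
            matches.update(f.name for f in path.glob(f"*{keyword}*.nse"))
    return sorted(matches)


if __name__ == "__main__":
    found = find_nse_script("vpn-scan.nse")
    print("vpn-scan.nse:", found if found else "not installed")
    print("scripts mentioning 'vpn':", similar_scripts("vpn"))
```

Calling `similar_scripts` with a keyword from the question gives you a short list of real scripts to review by hand. It is a quicker alternative to scrolling through the full list.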

Don’t lose hope; take a look at the following example of a success story for inspiration and encouragement.

Here is another example - ChatGPT meeting a real need.

We need an automated procedure for backing up this website, the one you are visiting right now.

We want to build our own tool and also offer it for free to other companies like us.

Our website runs on WordPress, and our hosting account is managed through a control panel called cPanel.

cPanel offers a way to back up everything, but we had to run it manually every time.

However, cPanel also offers software developers tools to automate this task.

We searched the internet and found some articles, but no complete solution in the programming language we are familiar with.

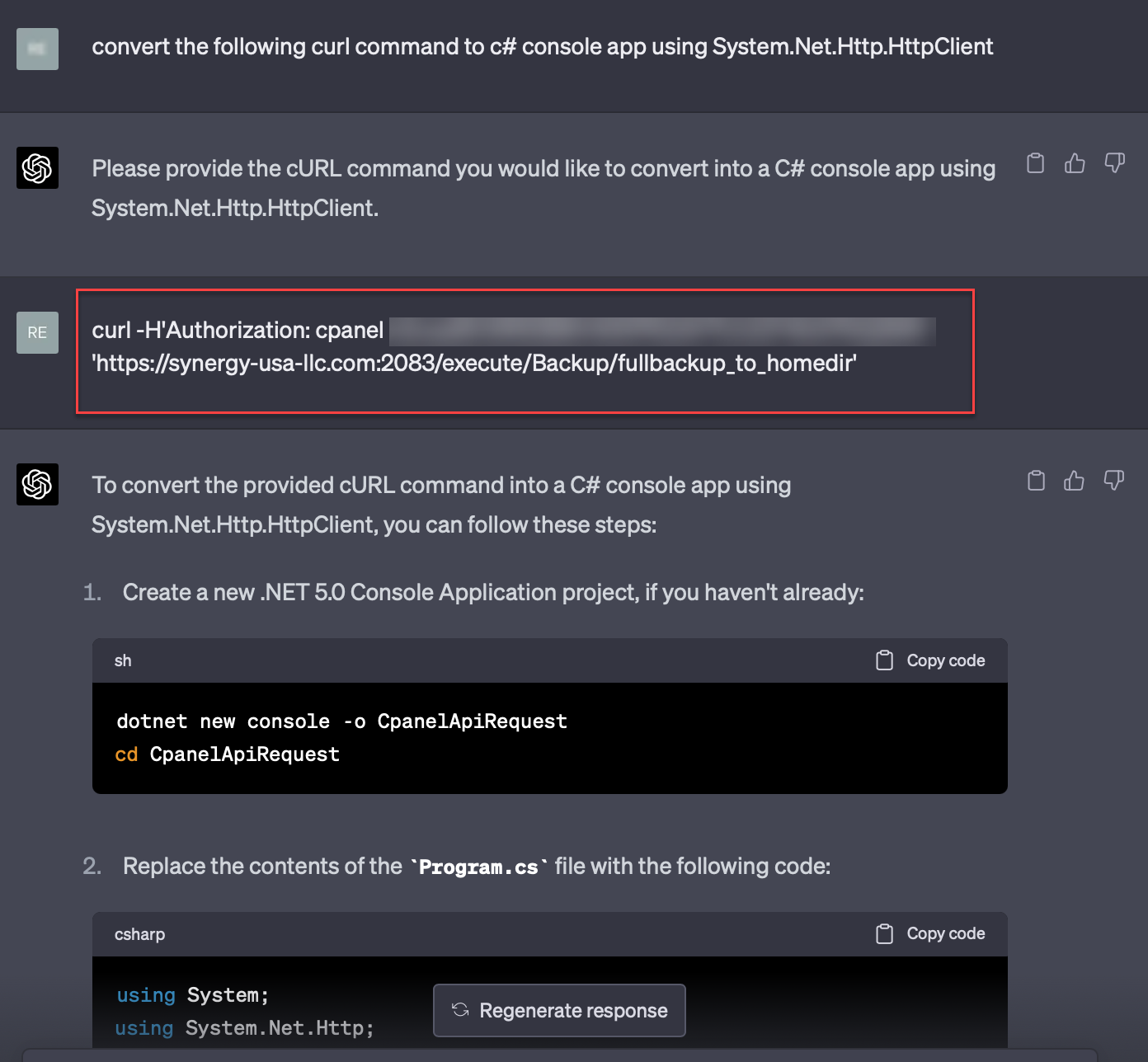

So here is what we asked ChatGPT to do:

cPanel offers a way to back up a website remotely with a ‘curl’ request that passes the required parameters and credentials.
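For readers who want to try the same approach, here is a minimal Python sketch of such a request. It is not the code ChatGPT generated for us. The sketch assumes cPanel's UAPI function `Backup::fullbackup_to_homedir`, reached over HTTPS on port 2083. It also assumes API-token authentication, sent as an `Authorization: cpanel <user>:<token>` header. The host name, user name and token are placeholders. Please confirm the endpoint against the documentation for your cPanel version. Building the request and sending it are kept separate, so you can inspect the URL and header before anything is sent.

```python
import json
import urllib.request


def build_backup_request(host: str, user: str, token: str,
                         port: int = 2083) -> urllib.request.Request:
    """Build (without sending) a request asking cPanel for a full backup.

    Assumption: cPanel's UAPI Backup::fullbackup_to_homedir function,
    exposed at /execute/Backup/fullbackup_to_homedir, with an API token.
    """
    url = f"https://{host}:{port}/execute/Backup/fullbackup_to_homedir"
    # Assumed cPanel API-token header format: "cpanel <username>:<token>"
    headers = {"Authorization": f"cpanel {user}:{token}"}
    return urllib.request.Request(url, headers=headers, method="GET")


def send_request(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    # Placeholder values: replace with your own host, cPanel user and API token.
    req = build_backup_request("example.com", "cpaneluser", "YOUR_API_TOKEN")
    print(req.get_method(), req.full_url)
    # print(send_request(req))  # uncomment to actually request a backup
```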

We asked GPT to create a new application built specifically around this command.

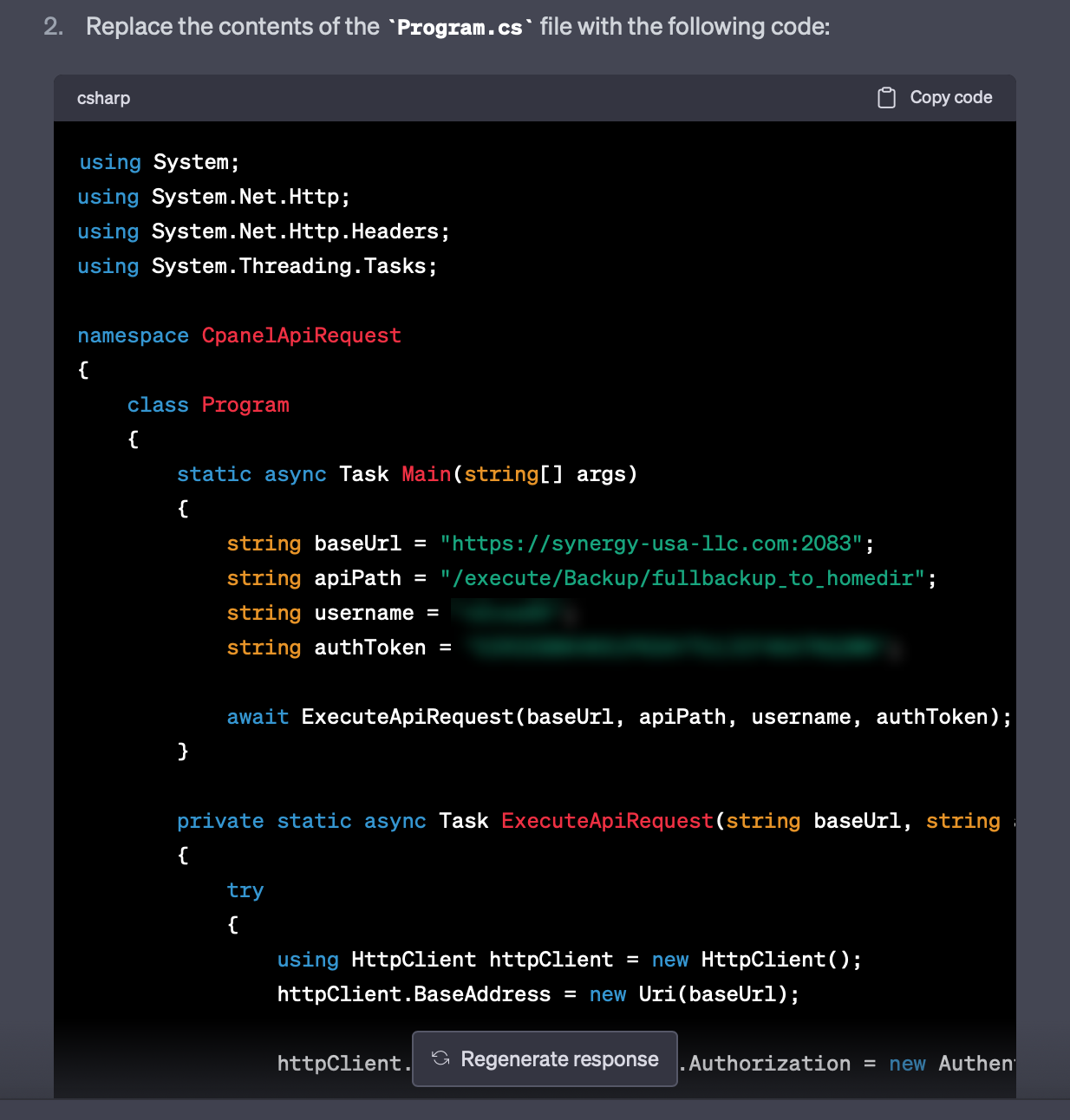

And it came back with the following code:

The code compiled as is, with no changes on our side. Flawlessly!

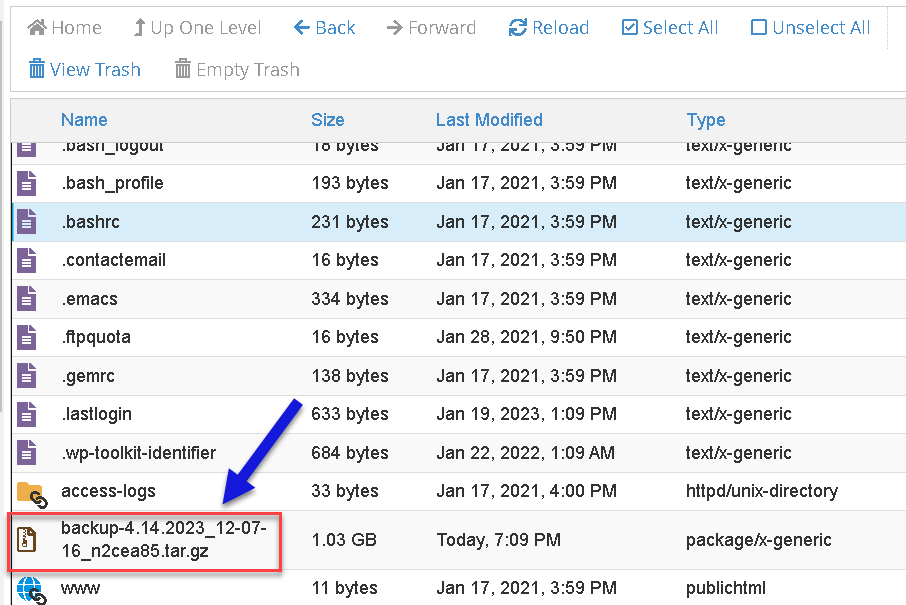

We built and ran the code, and it successfully created the backup.

We now have a foundation on which to build a more comprehensive application, saving us significant time and resources.

We are confident that ChatGPT will greatly enhance our productivity, enabling us to create tools that were previously beyond our reach.

As long as you know how to phrase your questions appropriately, you can make full use of its potential, at least in this specific case.

Stay tuned: we will soon post the free app in our Freebies section.

New browser plugin feature: definitely an improvement

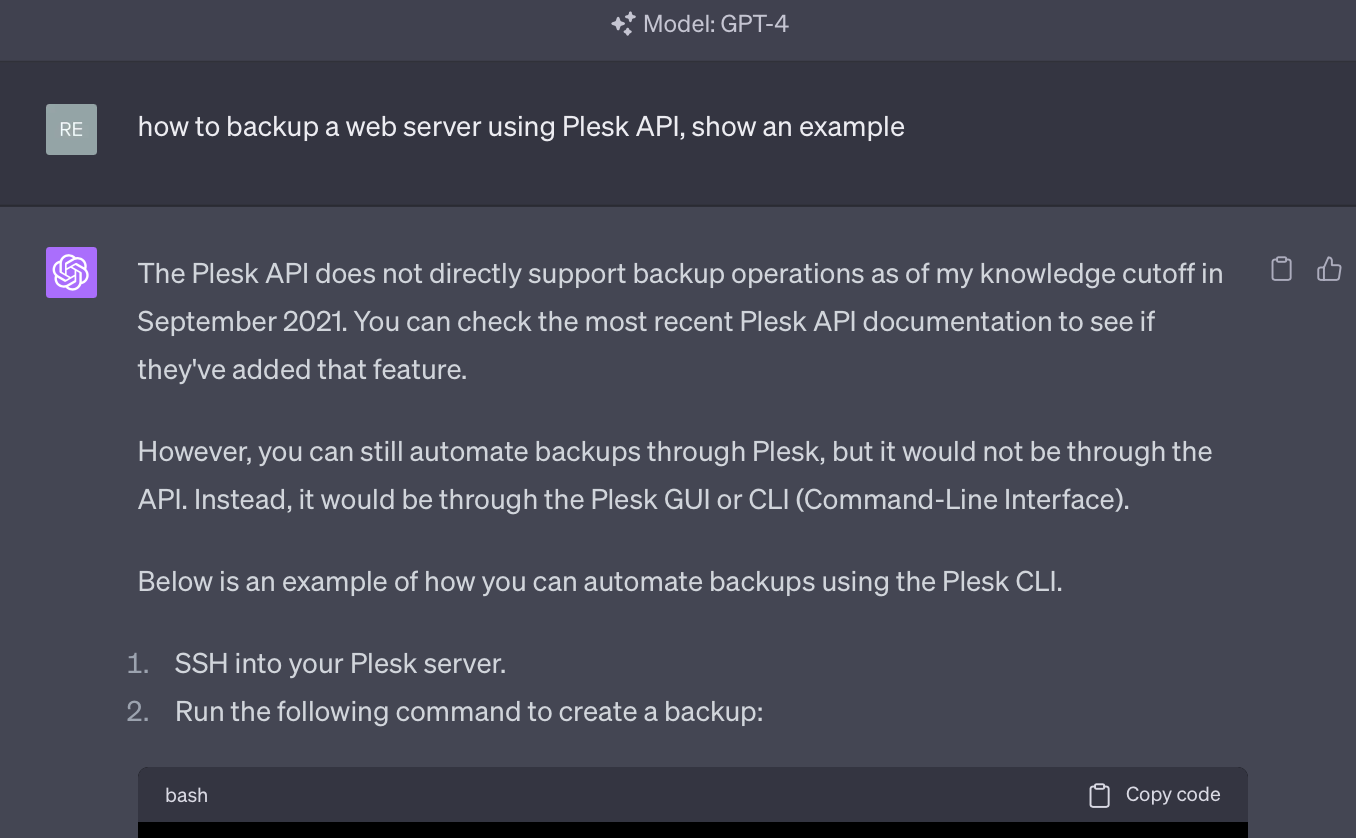

Searching for a solution with GPT-4 alone does not return an up-to-date solution:

With the Web Browsing plugin enabled, however, GPT-4 comes up with an updated and improved solution: